AI Is a Tool, Not a Creative Solution

“What you men call ‘work life’ is full of flattery and peer pressure. I can be part of those, too. I’m just appalled by them.” – Yeo Mi Ran, “Love to Hate You,” Netflix, 2023

Okay, who or what will write AI’s biography in the video content (movies/shows) industry?

Will it follow the storyline of Ex Machina, Her, Terminator 1 & 2, A.I. or …?

Studios, streamers and content owners aren’t thinking that far into the future.

They’re 100 percent focused on next quarter’s/next year’s results.

To achieve results, they are intent on embracing the innovations that will enable them to stay ahead in a very competitive, rapidly changing industry.

Right now, AI is a promising tool that will enable them to develop, create and deliver the films/shows audiences want to see, and to do it more efficiently and more effectively.

The technology has been poking around the industry since it first appeared in Metropolis in 1927. It has been getting bigger, better, more refined and more involved, but we still don’t have a clear idea (be honest) about what it’s going to do for us other than “Gawd, it’s gonna be great!”

The technology limped/lumbered along doing stuff – crunching, grinding numbers and data – the hard way over hours and days until Jensen Huang’s Nvidia introduced the first GPU (graphics processing unit) in 1999 to make secret government visualization work easier, faster, better and more photorealistic.

Spook stuff is nice, but Huang and his folks wanted to have fun (and make money), so they made bigger, better GPUs for the video game industry. Not for the kind of games you play on your iPhone for hours, but for the kind that run on big hummer computers like those a couple of friends build from the frame up so they can play ultra-realistic games for days on end.

As computer power grew, so did its ability to handle vast amounts of data and the need for more data to do more things.

Smart Use – Netflix has been sifting through, sorting and prioritizing viewer data – not to sell it to outsiders but to give itself an edge in pulling viewers in and keeping them involved with its content. The data also helps it shape content and projects to attract more subscribers.

Netflix and the other streamers solved the difficulties of distributing any content around the world as well as delivering just the content the viewer wants in the blink of an eye (O.K., faster).

Working closely with SMPTE (Society of Motion Picture and Television Engineers), Netflix developed codecs (encoder/decoder) that would enable high-resolution video to be sent over the internet for people to enjoy.

To minimize the time/frustration of finding just the show people want to watch, the streamers put GPUs and AI to work on viewer data to recommend the shows/movies folks would most likely enjoy. The more data available/used, the better the recommendations and the happier the viewer.

Content creators see the GPU and AI as opportunities to make video stories that are more realistic, more immersive and more emotionally involving for the viewer.

Commenting on AI in video creation, Ted Sarandos, co-CEO of Netflix, said during a recent earnings call that AI will produce a number of creative tools that will enable creators to tell better stories, noting that “there’s a better business and a bigger business in making content 10 percent better than making it 50 percent cheaper.”

That’s true, but studio executives, content owners and streamers see the potential of the technologies as an opportunity to do both.

Allan McLennan, former CEO of 2G Digital Post and now CEO of PADEM Media Group, has been instrumental in using AI-enabled localization tools to make vast amounts of excellent international content economically available to people around the globe.

Global Views – Streaming broke down country borders to show that you like our stuff and we like your stuff, as long as we can understand what is being said. AI-enabled localization (subtitles or dubbing) has gotten better, faster and cheaper.

Fast, accurate, economical localization has expanded our viewing horizons to projects created in Germany, France, Africa, Saudi Arabia, India, Japan and elsewhere.

It doesn’t really matter if a project was carefully dubbed into English – or your native language – or if it has subtitles. The technologies have broken down the time/cost barrier for people to watch, enjoy, understand and appreciate the video stories as they unfold.

But the AI-enabled globalization has come at a cost.

The GPU-powered solution is faster, more accurate and more efficient than human facial manipulation and voice translation.

It is even less expensive to add subtitles and still enjoy the richness of the dialogue and follow the project.

McLennan noted that the roles of film/show translators and visual artists have changed from long hours of detailed facial/voice translation to ensuring the accuracy and flow of the content.

Similar changes can be expected throughout the content creation/production cycle. But change and adjustment have been constant throughout the history of the film/show industry.

A month ago, we took a break and went through the Walt Disney Family Museum in San Francisco’s Presidio.

Animation – Early animated films took months/years to produce, with each cel painstakingly painted by an animator/artist. Computerizing the work reduced production time, increased quality and opened the door to great entertainment. No one really wants to go back.

It’s difficult to believe that animation artists spent hours carefully creating each of the cels that were needed to produce a 10-15 minute short.

Living Art – Pixar and other animation studios around the globe have blended together computer power, GPUs and powerful software to make projects more interesting, inviting and less expensive to produce. AI-enabled solutions will improve the quality, lower the cost and impact the number of people involved.

Today, the 6,000-plus members of the Animation Guild are able to use Nvidia GPUs and other massively parallel computers and image rendering technologies to create photorealistic feature-length films at a fraction of the time and cost.

But it’s the next technological leap that has them – and the other unions, including the DGA (Directors Guild of America), WGA (Writers Guild of America) and SAG-AFTRA (Screen Actors Guild – American Federation of Television and Radio Artists) – calling for stronger regulation on the use of AI.

After all, Jeffrey Katzenberg, former Disney and DreamWorks chief, has said that AI could eliminate as much as 90 percent of the cost of making an animated film because, even with high-performance computers and advanced creative software, the projects are still labor-intensive.

The challenge is to balance Wall Street’s and shareholders’ demands (requests) to reduce the number of false-start projects and lower the cost of projects against the interests of the people who make them.

Netflix and other studios/streamers have made excellent use of their growing volume of viewer/subscriber and viewing data as well as AI and LLM (large language model) technologies to gain a greater understanding of what projects, storylines and segments capture and keep viewers and which don’t.

This helps everyone in the industry – directors, producers, scriptwriters, actors, cinematographers, postproduction and marketing – understand audiences, their preferences and trends, and it helps guide the creation of unique, engaging movies and shows.

Empty Room – AI can do a lot to make movies/shows better and less expensive to produce but it can’t add the intangible elements that get people to sit down and watch for hours on end.

After all, no one in the industry wants to work on or be involved with a project that no one watches.

AI developers/promoters as well as studio executives/investors are adding the technology where it can deliver even more results.

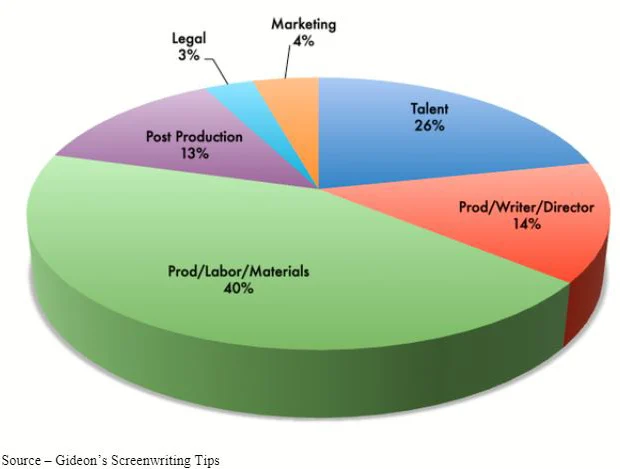

Project Budget – Like it or not, every film/show has a budget with certain items, such as above-the-line folks and producers, that are relatively firm. It is only in the below-the-line areas that creators can reduce costs by automating certain tasks.

The budget breakdown is roughly the same whether it’s a tentpole or “standard” film. Roughly 20-30 percent of the budget is allocated to above-the-line talent costs, 50 percent is budgeted for below-the-line costs and 20-30 percent is invested in post-production.

AI is increasingly being used in pre-production and production areas such as script analysis and cast/shot selection.

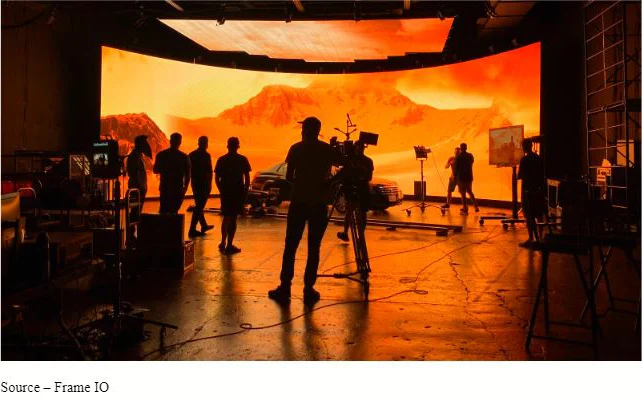

Virtual Production – LED virtual production sets are being installed and used around the world because they make films/shows easier, faster, more economical and more “real” to produce.

Virtual production facilities have emerged as a valid and viable middle ground application that takes advantage of AI advances while giving filmmakers the ability to let their creativity and imagination take wing.

VP has significantly reduced production costs by minimizing the need for location shoots, set construction and other crafts work while still preserving the essence of creative content development/production. This has reduced art and construction costs by as much as 30 percent while saving an estimated 60 percent in VFX and post-production time and expense.

The facilities and tools have also had a significant (positive) impact on the depth, breadth and cost of developing shows and movies that could only be imagined a few years ago.

AI technology in tools from Adobe, Avid and other software suite providers is increasingly being used during the production and postproduction of projects to handle the time-consuming, mind-numbing work that is tedious but necessary to make a project not just palatable or good, but great.

The solutions automate audio/video editing, generative images, special effects and other post work, which not only delivers the final work more rapidly but also allows producers and editors to quickly and economically examine scene and workflow options that will improve the final film/show.

No one disagrees that the film/show industry needs to produce the most and best content possible more efficiently, more quickly and more economically. But it must be done in a way that retains the heart/soul, emotion and “feel” of a human film/show people can connect with.

Mind Games – A lot of people are using AI to create very real-looking content, and the negative use of it will only continue. In the end, though, less-than-credible usage will only sour folks on watching the content and perhaps even cause a rebellion against the creators.

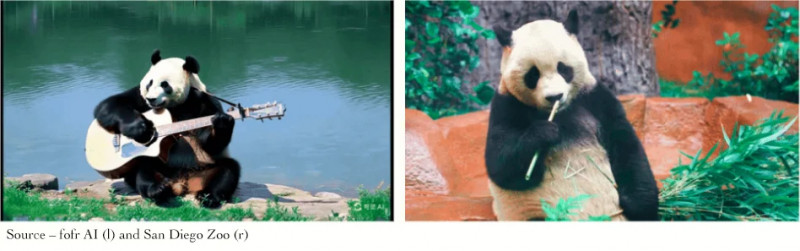

We were mildly impressed when China sent a panda with so much talent to the San Diego Zoo, but then we were disappointed to find out the only thing it really did was eat bamboo and look cute.

It’s easy for AI hustlers to hype all of the great things the technology can do in content creation, but few of them ever mention (and probably don’t think about) how real people are going to respond/react to the content.

A number of studio/streamer executives acknowledge that there will be a number of AI-generated films developed and released in the years ahead. However, as one noted, “There’s going to be such a volume of crap, and the vast majority of AI content is going to suck.”

We’re only beginning to see it. And a guitar-playing panda is just the beginning.

We agree that Sarandos and other studio/streamer executives are interested in having creatives use the tools that will make the content we watch better and, along the way, do it more economically.

But they also want shows/films that attract and hold the viewer – content that is truthful, authentic and reeks of human emotion and connection.

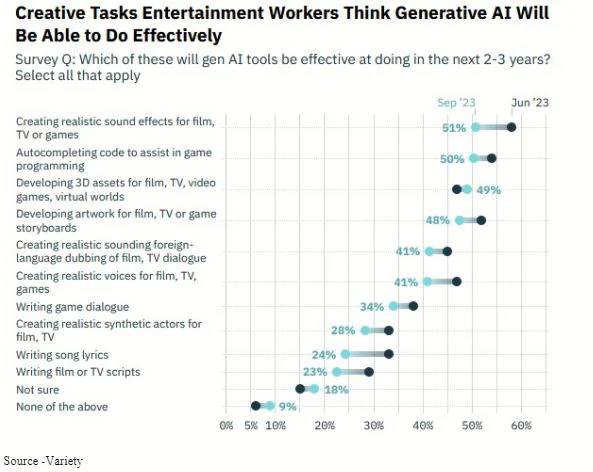

Effective – Professionals in the content creation arena are tracking, examining and trying creative AI “solutions” on an ongoing basis to determine which will really meet the industry’s needs and which are useless tools despite what technologists claim.

OpenAI’s boss Sam Altman, folks with fortunes riding on AI’s future and certain hypesters want to see the stuff used throughout the entertainment industry. Their pitch is that it will result in massive savings and profits.

But that’s plain BS!

All you end up with is massive computer power sifting through volumes of data to produce more data.

Data doesn’t understand common sense or emotion.

Common sense says AI and LLMs are tools.

Common sense says those tools will reduce/eliminate the work of some people.

Common sense says creatives – at every level – must develop the idea, guide the project and know when it’s time to end it to reach, touch, affect … people.

When that happens, the target audience will agree with Yeo Mi Ran in Love to Hate You when she said, “I was the one who wanted to end it, but I’m the one who can’t let go.”

Those are the kinds of video stories people need/want to create and watch.

The tools only help complete the cycle.